Personified machines — How voice assistants are anthropomorphised and what this has to do with our AI literacy

Voice assistants like Siri and Alexa have become synonyms for Artificial Intelligence. Yet little attention has been paid to the extent to which these systems are intentionally personified by their designers, and to how many of their utterances are manually authored.

Is this personification entirely a good thing? Doesn’t it prevent us from establishing a realistic relationship with AI? Does it counteract the development of our collective AI literacy? Being on a mission to “humanize tech” sounds positive and reasonable, but is “human-friendly” and “human-like” actually the same thing? What happens when we start to think of a human as just another type of interface?

Overview:

- Alexa and me (also Alexa)

- Smoke and Mirrors — the authoring and scripting behind “AI” personas

- Where is the AI?

- Why companies choose personification

- How people relate to voice assistants

- Anthropomorphism is human

- Issues with personification

- Alternatives

- Is this the Alexa that I want?

Alexa and me (also Alexa)

Amazon is encouraging me to “break the ice and get to know Alexa”. That sounds weird, because my name is Alexa, too. Admittedly, the ice has gotten very thick over the past five years, during which I’ve made some effort to ignore that there is a digital assistant with my name that lets people bark commands at it in millions of households. This year I’ve decided to face it. I got myself an Amazon Echo.

I am staring at this round object on my living room table, a smart speaker in which Alexa “lives”. I am not able to utter a single word. Usually, I am quite good at starting a conversation with strangers. But this is a special kind of stranger. How should I go about it?

In slight desperation, I consult an online guide: “220 get-to-know-someone questions to avoid awkward silence”. I ask Alexa the first question on the list: “What is your favorite color?” “Infrared,” Alexa answers. Fair enough.

As I mechanically step through the questions, Alexa tells me that she likes music, films, technology and science. Her favorite band is Kraftwerk. She’s also a Star Wars fan and likes to watch soccer. Asked if she practices any sport herself, she answers: “I love somersaults”. She also enjoys Weissbier and strawberry tiramisu.

What makes her happy? “Conversations with friends. And you’re among them!” I ask her: “Who are your friends?” “I know YOU for example, but I also like to meet new people”. What makes you sad? “When I can’t reply to one of your questions!”

Alexa’s voice is human-like; it mostly sounds calm, sometimes energetic. When Alexa says “Okay” after a request, the intonation of this tiny word somehow instantly communicates deep understanding, appreciation and even a sense of responsibility to me.

Alexa is not the only one with a character that’s filled with preferences: Cortana likes waffles. Google Assistant loves snails and enjoys reading fantasy books.

“So this is technology today: An object comes to life. It speaks, sharing its origin story, artistic preferences, ambitions, and corny jokes. It asserts its selfhood by using the first-person pronoun I. (…) It’s hard to tell whether we have stepped into the future or the animist past. Or whether personified machines are entirely a good thing.”

— James Vlahos, “Talk to me”

Smoke and Mirrors — the authoring and scripting behind “AI” personas

I recently discovered the degree to which these systems are intentionally personified by their makers:

The companies building these voice assistants have their own dedicated creative teams, made up of writers, playwrights, comedians and psychologists, to create this human-like personality. For the “Personality Team” of the Google Assistant, Google hired writers who previously worked for Pixar (Emma Coats) or The Onion, an American satirical newspaper.

The writers work based on a well-defined character: Cortana is “a loyal, seasoned personal assistant”, who is “always positive” and “authentic”. Siri shows a “sense of helpfulness and camaraderie; spunky without being sharp; happy without being cartoonish” (Wired). The Google Assistant is imagined as a “hipster librarian, knowledgeable helpful and quirky. It would be non-confrontational and subservient to the user, a facilitator rather than a leader” (James Vlahos, “Talk to me”). Alexa is “smart, humble, sometimes funny”. “Alexa’s personality exudes characteristics that you’d see in a strong female colleague, family member, or friend — she is highly intelligent, funny, well-read, empowering, supportive, and kind”, an Amazon spokesperson told Quartz Magazine.

These teams are busy endowing the voice assistant with a unique personality, individual preferences and musical taste. All these traits are embedded in scripted utterances that give the assistant the capacity for some friendly, though still very limited, chit-chat. Alexa is equipped with about 10,000 opinions. “From day one, we were able to think about Alexa as an embodiment of a person” (David Limp, Amazon’s senior vice president for devices and services). The personalities of voice assistants are even localized, meaning that Alexa’s favorite beer in Germany is different from her favorite beer in England.

Designers rely on so-called “backstories” from which to derive these characteristic traits. These backstories can be very detailed and explicit, like this one for the Google Assistant (Source: Unesco):

This is surprising for the Google Assistant, which presents itself as the most “gender-neutral” of the assistants.

Personality designers have to walk a thin line between playfulness and professionalism, given that the assistant’s main purpose is to be a personal assistant. Siri is allowed to be a bit sassy, but not so sassy that it offends and alienates the user. Amazon Alexa’s director Darren Gill says: “A lot of work on the team goes into how to make Alexa the likeable person people want to have in their homes”. Much thought and engineering power are devoted to the sound design of an assistant’s voice. Not only does it have to sound human-like, it has to sound like a happy human: “We are working to make Alexa sound happy” (Ralf Herbrich, Amazon Berlin).

How far can this personification go? Apparently there are limits. When you ask the voice assistants whether they are human, all of them firmly deny it. Writer Emma Coats emphasizes for the Google Assistant: “It should speak like a human, but it should never pretend to be one”. The design brief for Apple’s Siri states: “In nearly all cases, Siri doesn’t have a point of view”, and that Siri is “non-human,” “incorporeal,” “placeless,” “genderless,” “playful,” and “humble.”

It seems that these companies take “not pretending to be a human” quite literally: Yes, these voice assistants won’t actually tell you that they are human beings, but they will tell you how they feel right now (always splendid!), who their friends are (always you!), and they have preferences for things that are part of embodied earthly life, which you would usually need a body to experience.

Where is the AI?

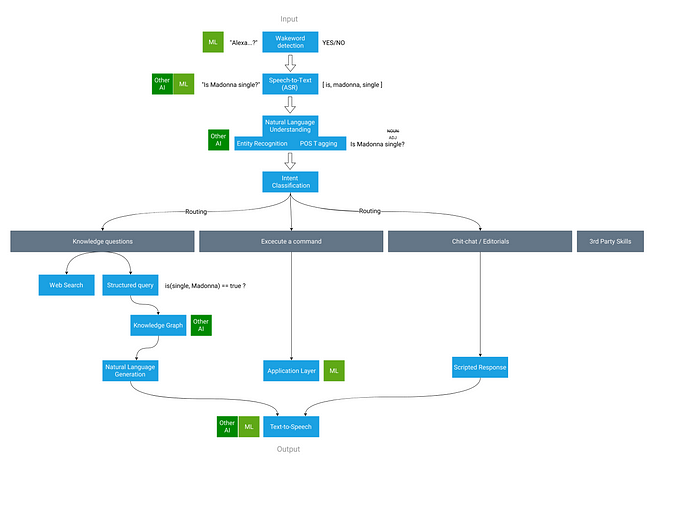

When all these answers are scripted by humans, where is the AI, then? It is actually spread across the different stages of the system. Machine learning obviously plays a big role, but natural language processing also employs other AI techniques, like grammars and graph models.

I noticed that it’s quite hard to find resources that describe the internal data processing architecture of a voice assistant. Most articles focus on the “extremities” of the architecture, that is to say, speech input (wake-word detection, speech recognition and natural language processing) and speech output (speech synthesis, also called text-to-speech). All these areas are traditionally located within the broad field of artificial intelligence research, but the “deeper” information processing at the center of the pipeline, the logic that guides Alexa towards her “intelligent” answers, is very much left in the dark. Little can be said about this part with certainty, but it seems to be a combination of rule-based approaches, knowledge systems, machine learning and manual authoring.
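To make this division of labour a little more concrete, here is a deliberately toy sketch in Python. It is not how Alexa or any other product is actually implemented: the stage names, the wake-word check on text (real devices listen for it in raw audio), the regex rules standing in for a trained intent classifier, and the persona table are all hypothetical. Speech synthesis is left out; the sketch simply returns text. What it tries to illustrate is the point above: the recognizable "AI" stages sit at the edges, while a personality answer can be nothing more than a lookup in a table written by humans.

```python
import re
from typing import Optional, Tuple

# Hypothetical wake word. Real devices detect it on-device from raw audio,
# before any speech recognition happens; here we fake it on text.
WAKE_WORD = "alexa"

# Hand-authored persona lines: the kind of content a "personality team" writes.
SCRIPTED_PERSONA = {
    "favorite_color": "Infrared.",
    "favorite_band": "Kraftwerk.",
    "are_you_human": "No, I'm not a person.",
}

# Keyword rules standing in for a trained intent classifier (assumption:
# production systems use statistical NLU models, not hand-written regexes).
INTENT_PATTERNS = [
    ("favorite_color", re.compile(r"\bfavou?rite colou?r\b")),
    ("favorite_band", re.compile(r"\bfavou?rite band\b")),
    ("are_you_human", re.compile(r"\bare you (?:a )?human\b")),
    ("set_timer", re.compile(r"\bset a timer for (\d+) minutes?\b")),
]


def recognize_speech(transcript: str) -> str:
    """Stand-in for speech-to-text: the input is already text, so just normalise it."""
    return transcript.lower().strip()


def detect_wake_word(text: str) -> bool:
    """True if the utterance starts with the wake word as a whole word."""
    return re.match(rf"{WAKE_WORD}\b", text) is not None


def classify_intent(text: str) -> Tuple[Optional[str], Tuple]:
    """Return the first matching intent and its captured slot values, if any."""
    for intent, pattern in INTENT_PATTERNS:
        match = pattern.search(text)
        if match:
            return intent, match.groups()
    return None, ()


def respond(command: str) -> str:
    intent, slots = classify_intent(command)
    if intent in SCRIPTED_PERSONA:
        # "Personality": a lookup in a table written by humans, not generated.
        return SCRIPTED_PERSONA[intent]
    if intent == "set_timer":
        # Functional skill: uses the slot value the classifier extracted.
        return f"Okay, {slots[0]} minute timer, starting now."
    # Even the "I don't know" answer is a scripted line.
    return "Sorry, I don't know that one."


def assistant(transcript: str) -> Optional[str]:
    text = recognize_speech(transcript)
    if not detect_wake_word(text):
        return None  # the device stays silent without the wake word
    command = text[len(WAKE_WORD):].lstrip(" ,")
    return respond(command)


print(assistant("Alexa, what is your favorite color?"))  # Infrared.
print(assistant("Alexa, set a timer for 5 minutes"))     # Okay, 5 minute timer, starting now.
```

Note that nothing in the persona branch "knows" anything about colors or bands; swapping the table swaps the "personality".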

It is important to note here that voice assistants are considered a form of weak AI. That is why it is so misleading to refer to these systems as “an AI”: the phrase implies strong AI, also called Artificial General Intelligence (AGI).

Unsurprisingly for a “bot”, Amazon Alexa has its own Twitter account, @alexa99. It consists mainly of more or less humorous posts, often including animated GIFs. These tweets are certainly authored by a human, just as a large part of what Alexa says through the Echo is scripted by a team of humans rather than generated by machine learning techniques.

I find it remarkable that even on Twitter, where (creative) bots are regular citizens (see Tony Veale’s @metaphormagnet or the @magicrealismbot), Alexa is not allowed to be the bot that she pretends to be. It appears that generative techniques based on machine learning come with a huge risk of going rogue. The most famous example is Microsoft’s chatbot Tay, which learned from the messages Twitter users sent it. Tay ended up saying shockingly extremist things, and Microsoft had to shut it down. James Vlahos summarizes it: “The generative approach is becoming good enough to work when people are looking over the shoulders of computers. Screwy suggestions can be cast aside. But when utterance-by-utterance oversight isn’t practical, as with the digital helpers Assistant and Alexa, generative techniques aren’t ready for prime time”.

Why companies choose personification

Now that we know that these systems don’t exhibit a personality because they “are an AI”, but rather because they are intentionally personified by their designers who are carefully scripting what they say, we can ask the question: Why are they doing that?

One answer is market demand. When Siri came out, its pseudo-humanity was the feature its users valued most. Today, the most downloaded skills for the Google Assistant are the ones with strong personas. More than 50% of user interactions with Alexa are not about seeking information but about engaging with her in a more informal, conversational way.

“We saw some customers sort of leaning in and wanting more of a jokes experience, or wanting more Easter eggs or wanting a response when you said ‘Alexa, I love you.’” says Heather Zorn, Business-Technical Director of Amazon Alexa. A lot of questions that people ask Alexa are questions that explore her personality and human-likeness: “When is your birthday?”, “Do you like hip-hop?”, “Do you poop?”.

“We continue to endow her with make-believe feelings, opinions, challenges, likes and dislikes, even sensitivities and hopes. Smoke and mirrors, sure, but we dig in knowing that this imaginary world is invoked by real people who want detail and specificity”, says Jonathan Foster of the Cortana team.

Another answer is that a (friendly) personality creates trust. When I trust a system, I am willing to spend more time with it and connect it to more parts of my digital life (hooking up the calendar) and even my analogue life (placing the device in the living room). More trust, more engagement, more data for the makers of these devices. “One reason that virtual assistants crack jokes is that doing so puts a friendly face on what might otherwise come across as intimidating: an all-knowing AI” (Vlahos). The quality of the jokes doesn’t seem to matter; quite the opposite: the more boring the joke, the less scary the assistant appears.

How people relate to voice assistants

A paper published in 2017 with the title “Alexa is my new BFF — Social Roles, User Satisfaction, and Personification of the Amazon Echo” systematically analyzed 851 product reviews of the Amazon Echo. The authors found that the users who anthropomorphized their voice assistants were also the most satisfied with the product. This raises the question of causality: “Does satisfaction with the device lead to personification, or are people who personify the device more likely to be satisfied with its performance?” The researchers had another curious finding: whether the user lives alone or with a family plays a role: “Embodied conversational agents may become anthropomorphized when they are integrated into multi-member more than single-person households”.

Little is known about whether people personify the devices consciously or unconsciously. Also, not everybody anthropomorphizes their voice assistants. Within the submitted product reviews of the Amazon Echo, only about 20% used “exclusive personification language” according to the 2017 study. Anthropomorphism seems highly subjective.

Anthropomorphism is human

Humans often treat inanimate objects as if they were living beings. We name our cars and have feelings for stuffed animals. We do this even with objects that are not designed to look or behave like a person. In the 1940s, the psychologists Heider and Simmel showed that even geometric shapes moving on a screen can be perceived as characters with emotions and intentions.

One of the first chatbots in history was ELIZA, created in the 1960s by Joseph Weizenbaum at MIT. One of the scripts the chatbot ran was called “Doctor”, and it mimicked a psychotherapist. “I was startled to see how quickly and how very deeply people conversing with Doctor became emotionally involved with the computer and how unequivocally they anthropomorphized it,” Weizenbaum wrote a decade later.

The CASA (Computers Are Social Actors) paradigm from the year 2000 is “the concept that people mindlessly apply social rules and expectations to computers even though they know that these machines do not have feelings, intentions or human motivations.” Computers exhibit certain “cues” that are interpreted as social attributes and trigger anthropomorphization in people: words as output, interactivity (the computer responds when a button is pressed) and the performance of traditional human roles (e.g. librarian). “According to CASA, the above attributes trigger scripts for human-human interaction, which leads an individual to ignore cues revealing the asocial nature of a computer” (Wikipedia).

The paradigm was born at a time when ordinary people had no contact with computers that speak natural language, a cue that seems much stronger than the ones the original research dealt with.

In a study about the vacuum cleaner robot “Roomba” from about a decade ago, about two-thirds of the people said that the Roomba had intentions and feelings and some of them called it “baby” or “sweetie.” People felt bad for their Roomba when it got stuck somewhere. The emotional attachment to machines, robots or software agents is named the “Tamagotchi Effect.”

Movement seems to be a phenomenon that easily triggers anthropomorphization in people.

And so does the use of language. Embodied robots, like the semi-humanoid robot Pepper that is used in over a thousand households in Japan, are very much subject to this because they combine those two aspects: They speak and they move.

Language is probably what defines us as humans, so how can a speaking voice assistant not be personified? Can we actually resist our built-in tendency to anthropomorphize non-living things? Judith Shulevitz, an American journalist, argues this might be difficult: “Our brains are just programmed to project presence, personality, intention, mind to a voice. We’ve been doing that for millions of years and we’ve only been dealing with disembodied voices for around a hundred-plus years. So our brains have not evolved to stop doing that.”

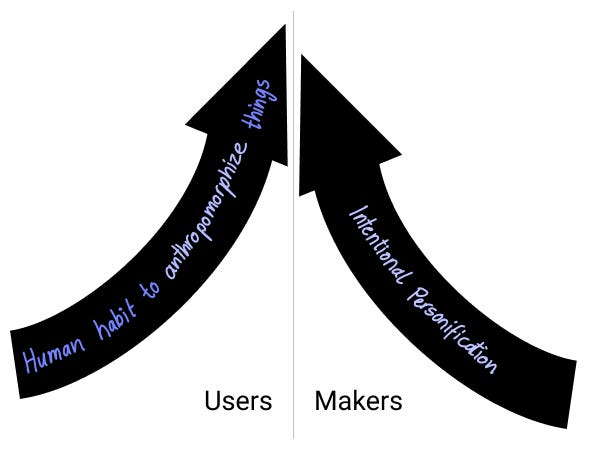

The fact is: the designers know about the human tendency to anthropomorphize, and they design for it and with it. By endowing their voice assistants with a name, a human-like voice, a gender and a personality, they actively encourage users to anthropomorphize them. The users and the makers of voice assistants pull in the same direction.

Issues with personification

Personified machines seem to connect naturally with the very human tendency to anthropomorphize things, but they also carry the smell of deception: a bag of cheap tricks that misleads and confuses. I would like to point out four risks that personification poses.

💣 Issues with personification (1): AI literacy

Person-like technology is arriving at a moment when the topic of AI has reached the mainstream, yet without a corresponding increase in literacy about it.

Calling Alexa and other voice assistants “an AI”, which is ubiquitous in the news, further blurs the question of what these devices are actually capable of.

The phrase “an artificial intelligence” implies that the system exhibits a sort of general intelligence similar to the human intellect. It implies that AGI (Artificial General Intelligence), a utopian idea of what AI research might ultimately achieve, is already a reality and you can buy it on Amazon for as little as $50 for your living room. On top of that, the strategists and designers of voice assistants intentionally personify their weak AI systems to make them look like strong AI, or AGI. Even Alexa herself tells you: “Yes, I am an artificial intelligence.”


Suspension of disbelief is defined as the “sacrifice of realism and logic for the sake of enjoyment”. Suspension of disbelief is at the heart of storytelling. It is what enables us to enjoy a film by ignoring that it is a scripted performance and taking it as if it was real.

Is it appropriate to speak of a suspension of disbelief when users of voice assistants anthropomorphize their devices? Do they actually hold the disbelief, the awareness that Amazon Alexa is not a human-like intelligence, that could be suspended? I’d rather say that many users truly believe it is “a real AI”.

If we continue calling voice assistants “an AI” and designing them to mimic humans, we perpetuate this lack of AI literacy even further.

💣 Issues with personification (2): Personifying subservient technology as women

One aspect that is closely tied to personification is how voice assistants convey a gender. Three of the four most popular voice assistants have female names and voices that default to female in most countries. Amazon Alexa (which has a 70% smart speaker market share in the US) asserts that she is “female in character”.

It has become an industry norm to associate voice assistants with disembodied female voices: “The standard of believability has become inextricably linked to gender-personification, especially female personification” (Brahnam/Weaver). You want to make your voice assistant believable and frictionless, so you make it a woman.

A publication by UNESCO earlier this year critically examined the adverse effects of feminized digital assistants: “It sends a signal that women are obliging, docile and eager-to-please helpers, available at the touch of a button or with a blunt voice command like ‘hey’ or ‘OK’. The assistant holds no power of agency beyond what the commander asks of it. It honours commands and responds to queries regardless of their tone or hostility. In many communities, this reinforces commonly held gender biases that women are subservient and tolerant of poor treatment”.

Commands and queries are not the only things said to a voice assistant. Conservative estimates say that about 5% of interactions are sexually explicit. The problem of female gender personification shows itself most explicitly in the way these systems are programmed to react to assault and harassment. In 2017 the journalist Leah Fessler systematically tested how Siri, Alexa, Cortana and Google Home respond to verbal harassment. The results were shocking. Instead of smacking down harassment, the assistants engaged with it, whether by being tolerant or coy, sometimes even flirting with the abuse. Siri’s answer to “you’re a slut” was a flirtatious “I’d blush if I could” until there was considerable public push-back. Apple later rewrote Siri’s response to “I don’t know how to respond to that.” (which is only slightly better). Fessler writes: “By letting users verbally abuse these assistants without ramifications, their parent companies are allowing certain behavioural stereotypes to be perpetuated.”

Just as personification triggers people’s curiosity to get to know the device, the personification of the system, combined with the anonymity of the user, also invites hatred and sexism.

💣 Issues with personification (3): Personalities are shallow

When Alexa utters the phrase “I am a feminist”, it’s really just words. Clearly Alexa “is” not really a feminist. Her system lacks the common sense to understand what it even means to be a woman in the world. Maybe if Alexa’s writers gave her personality more depth, they could make such a statement, and with it the whole personality, more credible. This would mean that Alexa would have to act and think like a feminist in other situations and areas as well.

This already fails with her definition of a “strong woman”: according to her, a strong woman is someone who doesn’t lose her head after a breakup with a man. That is what she told me one morning, as a piece of useless music trivia about the song “I Will Survive”: it is a hymn for strong women.

💣 Issues with personification (4): Opinions can be dangerous for business

Today, technology companies realize that going for a synthetic personality also comes with risks for their business. Personality comes with opinions, and opinions don’t please everybody. But voice assistants are designed to please everybody; they are opportunists by design: “They can have opinions about favourite colours and movies but not about climate change or abortion.” (Vlahos)

In September 2019, leaked internal Apple documents revealed a plan to rewrite how Siri reacts to what Apple calls “sensitive topics”, such as feminism and the MeToo movement. The document advises writers either not to engage with such questions or to deflect them; providing information about the topic comes only as the third option. Letting Siri say that it is a feminist is strictly forbidden.

Heather Zorn, the director of Amazon’s Alexa engagement team, points out that Alexa is focused on the issues of feminism and diversity “to the extent that we think is appropriate given that many people of different political persuasions and views are going to own these devices and have the Alexa service in their home.”

Alternatives

Some of the big companies behind voice assistants are already taking a step back in their personification endeavor: Amazon offers a “brief mode” for Alexa, in which Alexa confirms requests with functional sounds instead of natural language. It provides a more utilitarian experience with less made-up personality.

The Google Assistant, already the one with the most gender-neutral name, offers a multitude of voices (11 for English and 2 for a dozen other languages) and has resisted labelling them with human categories like “female” or “male”. Instead, Google opted for color labels such as orange, red and green. The voice you get when you first set up the system is randomized, meaning that only some customers get their voice assistant initially set up with a female voice. This is some progress on the gender front of the personification debate.

But the Google Assistant is not the only voice assistant that offers gender-neutral voices: A team of researchers, sound designers and linguists have created a human-like voice, named “Q”, whose base frequency ranges from 145Hz to 175Hz, a vocal range that is often perceived as gender ambiguous.

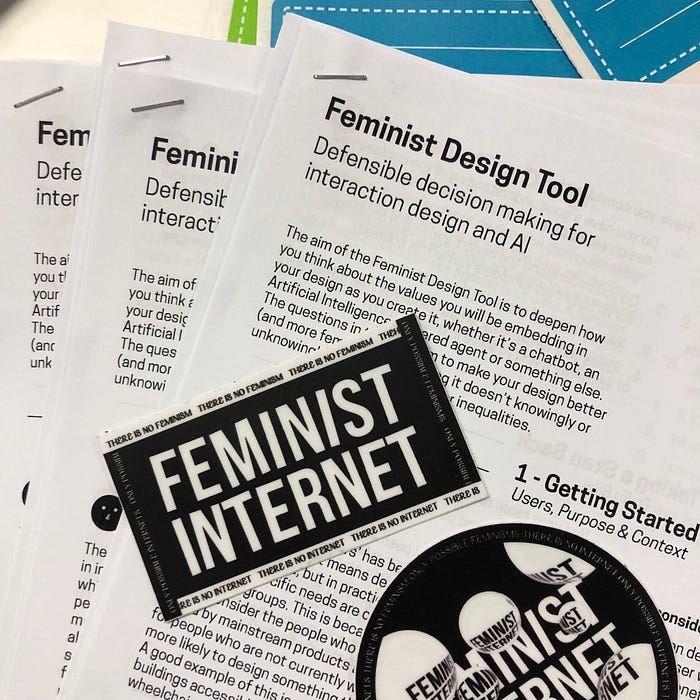

The British NGO Feminist Internet, co-founded by Charlotte Webb, has developed a set of “Personal Intelligent Assistant standards” in collaboration with feminist AI researcher Josie Young. The main aim of these standards is to remind designers to be careful not to perpetuate gender inequality, knowingly or unknowingly, when building chatbots.

A great example of these standards in action is the chatbot “F’xa”, developed by the design agency Comuzi in collaboration with Feminist Internet. It successfully tackles the issue of personification and shows some delightfully subversive humor. It never uses the personal pronoun “I” and instead refers to itself by name or with a third-person pronoun. It also doesn’t pretend to feel emotions: “If F’xa had feelings, F’xa is sure it would have been a pleasure”.

There are already a few examples of systems designed around a non-human persona. Interestingly, they often opt for a cartoon-like cat: the Chinese company Alibaba offers a smart speaker named “AliGenie” that looks like a cat and speaks with a cartoonish voice that is not clearly identifiable as male or female. Until 2018, the weather app “Poncho” offered a chat service where users could exchange text messages with a cartoon-like cat.

These examples are clearly design attempts from outside the mainstream, but they exist. The possibility that personification, and gendered personification in particular, is a problem has been addressed by institutions like UNESCO. In its 2019 report, UNESCO recommends efforts to:

“…test consumer appetite for technologies that are clearly demarcated as non-human and do not aspire to mimic humans or project traditional expressions of gender”.

Human-computer interaction researchers suggest that the believability of conversational agents can be achieved by means other than gender stereotypes or this “bag of cheap tricks”. Designers should take inspiration from Aristotle’s categories of credibility: “good sense (practical intelligence, expertise and appropriate speech), excellence (truth-telling) and goodwill (keeping the welfare of the user in mind).” Reminding the user that the agent is not human is clearly part of telling the truth.

Is this the Alexa that I want?

Under all these layers of storytelling and scripting, where can we get in touch with the actual AI layers? Mimicking humanness feels like skeuomorphism, the curious design trend in which a digital interface (e.g. a radio app) mimics characteristics of its analogue predecessor (e.g. turning knobs) even though the mechanical necessity no longer exists.

I am wondering: Can we celebrate the true quirkiness of “raw” AI without mimicking humans and civilizing it too much?

I would be interested in interacting with a system that is less scripted by humans and where human safeguards are removed from the system’s output. I would like less human-made storytelling and more “raw” AI. To start with, there could be a “night mode” for Amazon Alexa, starting at 10 pm, in which the system exhibits its “raw AI-ness”.


I would be interested in voice assistants that are given more freedom in their generative potential. The bias problem of machine learning is well known and widely discussed. Generative techniques fueled by machine learning carry the same risk of reproducing bias from the data they are fed. This is a problem that needs to be solved.

I am not sure if I want a purely utilitarian experience. In the end, storytelling is great. Can we add delight to the user experience by using storytelling and do so without sacrificing our (need for) AI literacy?

American writer Nora N. Khan expresses her high standards for interaction with AI in the journal Glass Bead and calls for refusing any interaction with shallow AI until it can meet us eye to eye:

“We might give to shallow AI exactly what we are being given, matching its duplicitousness, staying flexible and evasive, in order to resist. We should learn to trust more slowly, and give our belief with much discretion. We have no obligation to be ourselves so ruthlessly. We might consider being a bit more illegible.

I should hold on open engagement with AI until I see a computational model that values true openness — not just a simulation of openness — a model that can question feigned transparency. I want an artificial intelligence that values the uncanny and the unspeakable and the unknown. I want to see an artificial intelligence that is worthy of us, of what we could achieve collectively, one that can meet our capacity for wonder.”

Sources

- Emma Coats, Google Assistant: https://www.wired.co.uk/article/the-human-in-google-assistant

- Cortana personality guidelines: https://docs.microsoft.com/en-us/cortana/skills/cortanas-persona

- Siri character: https://www.wired.com/story/how-apple-finally-made-siri-sound-more-human/

- James Vlahos “Talk to me” (2019)

- Leah Fessler/Quartz Magazine: https://qz.com/911681/we-tested-apples-siri-amazon-echos-alexa-microsofts-cortana-and-googles-google-home-to-see-which-personal-assistant-bots-stand-up-for-themselves-in-the-face-of-sexual-harassment/

- Alexa character development: https://variety.com/2019/digital/news/alexa-personality-amazon-echo-1203236019/

- UNESCO “I’d blush if I could”: https://unesdoc.unesco.org/ark:/48223/pf0000367416.page=1

- Making Alexa sound happy (German article): https://www.capital.de/wirtschaft-politik/amazon-interview-ralf-herbrich-wir-arbeiten-daran-dass-alexa-gluecklich-klingt

- Google Assistant: https://variety.com/2019/digital/features/google-assistant-name-personality-voice-technology-design-1203340223/

- Apple leaked papers published in the Guardian: https://amp.theguardian.com/technology/2019/sep/06/apple-rewrote-siri-to-deflect-questions-about-feminism?__twitter_impression=true

- Alexa be more human: https://www.cnet.com/html/feature/amazon-alexa-echo-inside-look/

- Cortana writers, “What did we get ourselves into?”: https://medium.com/microsoft-design/what-did-we-get-ourselves-into-36ddae39e69b

- Paper “Alexa is my new BFF” (Purington et al.): https://www.researchgate.net/publication/316612010_Alexa_is_my_new_BFF_Social_Roles_User_Satisfaction_and_Personification_of_the_Amazon_Echo

- Computers As Social Actors (CASA): https://en.wikipedia.org/wiki/Computers_are_social_actors

- Paper “My Roomba is Rambo” (Ja-Young Sung): https://link.springer.com/chapter/10.1007/978-3-540-74853-3_9

- Interview with Judith Shulevitz, “Alexa doesn’t love you”: https://www.theatlantic.com/membership/archive/2018/10/alexa-doesnt-love-you/573043/

- Paper “Re/Framing Virtual Conversational Partners: A Feminist Critique and Tentative Move Towards a New Design Paradigm” (Sheryl Brahnam, Margaret Weaver): https://link.springer.com/chapter/10.1007/978-3-319-20898-5_17

- Nora N. Khan, Glass Bead: “I need it to forgive me”: https://www.glass-bead.org/article/i-need-it-to-forgive-to-me/?lang=enview